
Activity Recognition Challenge - Dataset

- 10th of November 2011: the challenge dataset with complete labels has been released. Follow this link.

- 12th of August 2011: an issue was found in S1-Drill.dat from the 10th of August update. Please download the new version.

- 10th of August 2011: the challenge dataset for tasks A, B1 and B2 has been re-issued! Please download the new version.

Opportunity dataset

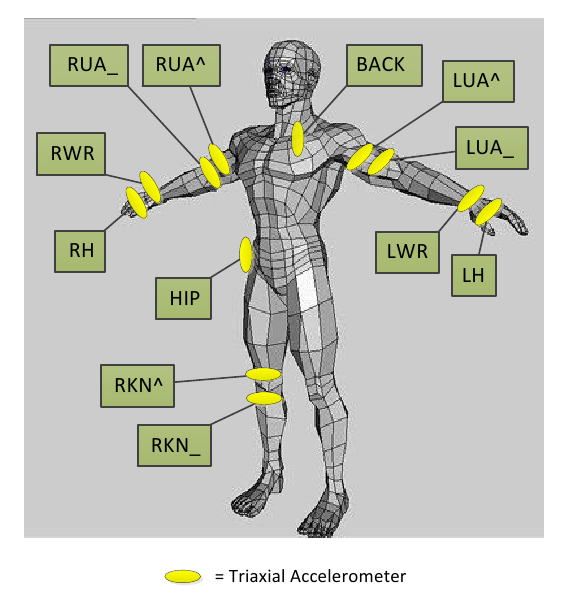

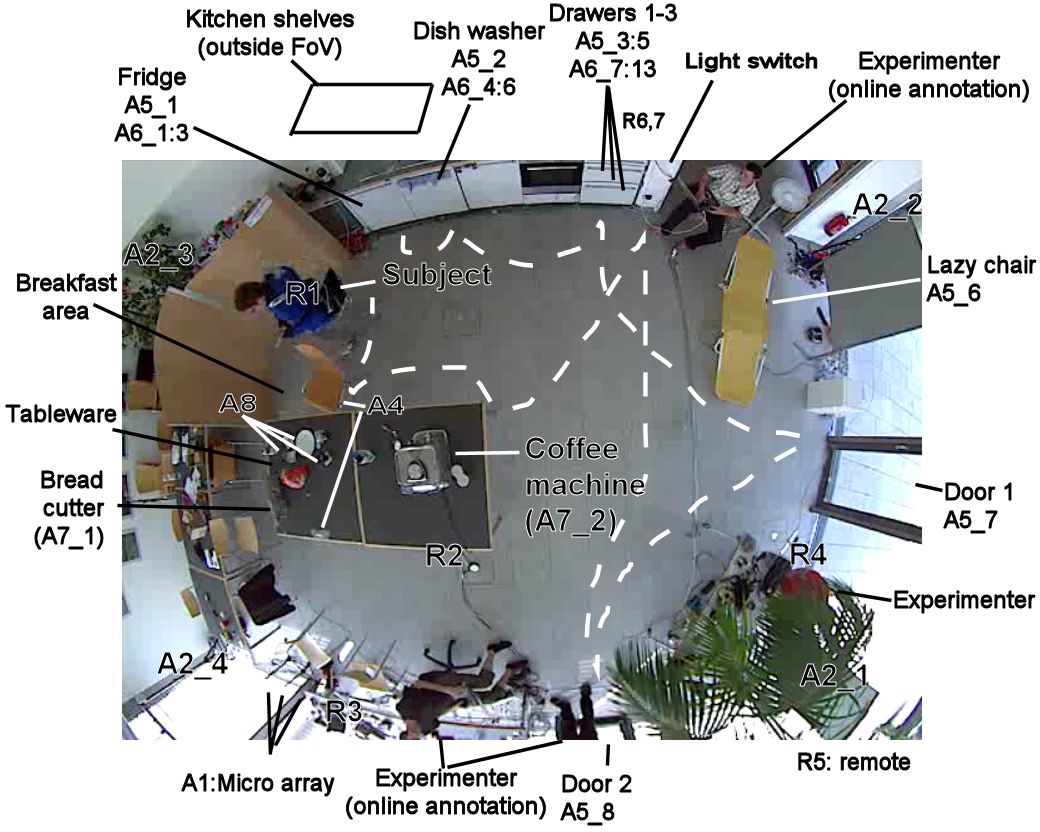

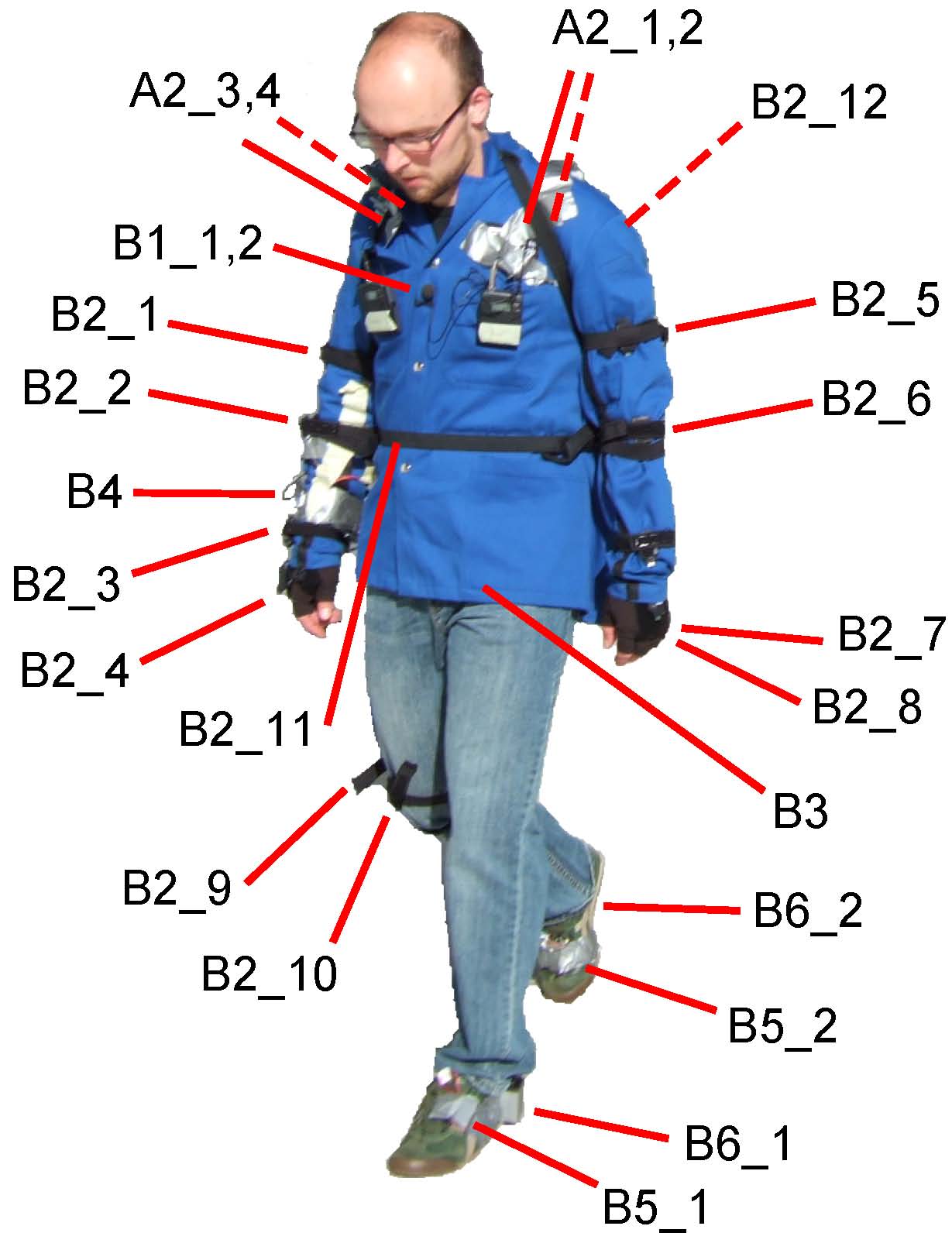

For this challenge we use a subset of the Opportunity dataset. This database contains naturalistic human activities recorded in a sensor-rich environment: a room simulating a studio flat with a kitchen, a deckchair and outdoor access, where subjects performed daily morning activities [1,2,3]. Two types of recording sessions were performed: Drill sessions, in which the subject sequentially performs a predefined set of activities, and "activities of daily living" runs (ADL), in which the subject executes a high-level task (wake up, groom, prepare breakfast, clean) with more freedom in the sequence of individual atomic activities.

We deployed 15 networked sensor systems of different origins (proprietary and custom, from different manufacturers or universities), comprising 72 sensors of 10 modalities integrated in the environment, in objects and on the body. The full setup, including both ambient and on-body sensors, is illustrated in the figures above. The result is an annotated dataset of complex, interleaved and hierarchical naturalistic activities, with a particularly large number of atomic activities (around 30,000), collected in a very rich sensor environment.

Data was manually labelled during the recording and later reviewed by at least two different people using the video recordings. The full dataset will be made available shortly after the end of the challenge.

When using this database, please refer to the two papers listed below.


References

- Roggen, D. et al. Collecting complex activity data sets in highly rich networked sensor environments. Seventh International Conference on Networked Sensing Systems, 2010.

- Lukowicz, P. et al. Recording a complex, multi modal activity data set for context recognition. 1st Workshop on Context-Systems Design, Evaluation and Optimisation at ARCS, 2010.

Challenge dataset

The data used for the challenge consists of recordings of 4 subjects and includes only the on-body sensors. For each subject we provide 6 unsegmented recordings: one Drill session and five ADL runs. Labels are provided for all recordings of one of the subjects. For the remaining subjects, labels are available for four of the sessions, while the last two sessions will be used by the organizers to evaluate the performance of the submitted methods. The labels for these recordings will be made public after the submission deadline. Participants may use the labelled data at their own discretion to tune their methods.
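The labelling scheme described above can be encoded as a small data structure, which makes the split between labelled and held-out sessions explicit. This is a minimal sketch: the session names follow the session table (Drill, ADL 1 to ADL 5), S1 is taken as the fully labelled subject as marked in that table, and the `split_sessions` helper and the `(subject, session)` tuple layout are hypothetical illustrations, not an official file layout or API.

```python
# Hypothetical encoding of the challenge labelling scheme:
# - one subject (S1 in the session table) is fully labelled;
# - for every other subject, the first four sessions are labelled and
#   the last two are held out by the organizers for evaluation.
SUBJECTS = ["S1", "S2", "S3", "S4"]
SESSIONS = ["Drill", "ADL1", "ADL2", "ADL3", "ADL4", "ADL5"]
FULLY_LABELLED = {"S1"}
N_HELD_OUT = 2  # last two sessions of each partially labelled subject


def split_sessions():
    """Return (labelled, held_out) lists of (subject, session) pairs."""
    labelled, held_out = [], []
    for subject in SUBJECTS:
        for i, session in enumerate(SESSIONS):
            is_held_out = (
                subject not in FULLY_LABELLED
                and i >= len(SESSIONS) - N_HELD_OUT
            )
            (held_out if is_held_out else labelled).append((subject, session))
    return labelled, held_out


if __name__ == "__main__":
    labelled, held_out = split_sessions()
    print(len(labelled), "labelled sessions")  # 6 for S1 + 4 each for S2-S4
    print(held_out)
```

Keeping the scheme in one place like this avoids accidentally tuning a method on sessions whose labels are withheld for evaluation.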

The following table summarizes the provided data; (●) marks the sessions for which participants receive the labels.

| Session | S1 | S2 | S3 | S4* |
|---|---|---|---|---|
| Drill | ● | ● | ● | ● |
| ADL 1 | ● | ● | ● | ● |
| ADL 2 | ● | ● | ● | ● |
| ADL 3 | ● | ● | ● | ● |
| ADL 4 | ● | | | |
| ADL 5 | ● | | | |

(*) Subject 4 is used to assess robustness to noise. For this reason, rotational noise will be added to the data of this subject. See Task C for further details.
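The exact noise model for Subject 4 is defined in Task C and is not reproduced here. As a minimal sketch, assuming "rotational noise" means rotating the 3-axis readings of a sensor by a random rotation (as if the sensor had been mounted with a different orientation), the idea can be illustrated with Rodrigues' rotation formula. The function names, the `max_angle_deg` parameter and the choice of a single fixed rotation per sensor are hypothetical assumptions, not the organizers' procedure.

```python
import math
import random


def rotation_matrix(axis, angle):
    """Rodrigues' formula: 3x3 rotation matrix for a unit axis and angle (rad)."""
    x, y, z = axis
    c, s = math.cos(angle), math.sin(angle)
    t = 1.0 - c
    return [
        [t * x * x + c,     t * x * y - s * z, t * x * z + s * y],
        [t * x * y + s * z, t * y * y + c,     t * y * z - s * x],
        [t * x * z - s * y, t * y * z + s * x, t * z * z + c],
    ]


def random_unit_vector(rng):
    """A normalised Gaussian vector gives a uniformly distributed direction."""
    while True:
        v = [rng.gauss(0.0, 1.0) for _ in range(3)]
        n = math.sqrt(sum(c * c for c in v))
        if n > 1e-12:
            return [c / n for c in v]


def add_rotational_noise(samples, max_angle_deg, seed=None):
    """Rotate every 3-axis sample of one sensor by the same random rotation.

    Hypothetical noise model: one fixed rotation of up to max_angle_deg
    degrees about a random axis, applied to all samples of the sensor.
    """
    rng = random.Random(seed)
    angle = math.radians(rng.uniform(-max_angle_deg, max_angle_deg))
    r = rotation_matrix(random_unit_vector(rng), angle)
    return [
        tuple(sum(r[i][j] * v[j] for j in range(3)) for i in range(3))
        for v in samples
    ]
```

Because a rotation preserves vector length, the magnitude of each acceleration sample is unchanged while its per-axis components are not; a method that relies only on per-axis values is therefore likely to be more affected than one that also uses orientation-invariant features.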

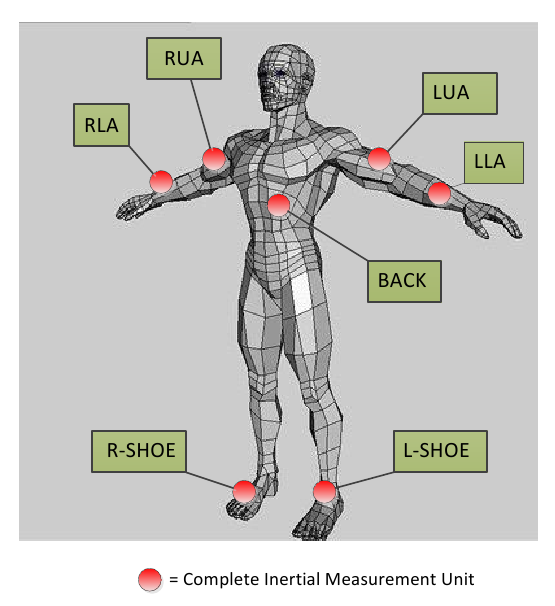

Sensor location

The images below show the locations of the on-body sensors used in the challenge. The subject wore a custom-made motion jacket composed of 5 commercial RS485-networked Xsens inertial measurement units. In addition, 12 Bluetooth acceleration sensors were placed on the limbs, and commercial InertiaCube3 inertial sensors were located on each foot.
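The on-body setup described above can be summarized as a small sensor inventory. This is a minimal sketch: the type and placement labels are hypothetical placeholders (the text gives counts and placement, not sensor identifiers), and "each foot" is read as one InertiaCube3 per foot.

```python
from collections import Counter

# Hypothetical inventory of the on-body sensor units described in the text.
# Each entry is (sensor type, placement); labels are illustrative only.
ON_BODY_UNITS = (
    [("jacket_imu", "motion jacket")] * 5                # RS485-networked IMUs
    + [("bluetooth_accelerometer", "limbs")] * 12        # acceleration sensors
    + [("inertiacube3", "left foot"),                    # one per foot
       ("inertiacube3", "right foot")]
)

units_by_type = Counter(kind for kind, _ in ON_BODY_UNITS)
total_units = len(ON_BODY_UNITS)

if __name__ == "__main__":
    for kind, count in units_by_type.items():
        print(f"{kind}: {count}")
    print("total on-body units:", total_units)
```

Under this reading the challenge data comes from 5 + 12 + 2 = 19 on-body sensor units.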

Figure panels: Inertial sensors; Accelerometers.


About

We develop opportunistic activity recognition systems, in which goal-oriented sensor assemblies spontaneously arise and self-organize to jointly achieve activity and context recognition. We develop the algorithms and architectures underlying context recognition in opportunistic systems.
